This is an English translation of

It's mostly powered by DeepL, so don't count too much on the English.

Goals

While a normal camera extracts the brightness and color of an object, a distance sensor senses the distance to an object. This is why they are sometimes called 3D cameras or depth sensors.

Knowing the distance is important in a variety of applications, for example, in automated driving, it is essential to know the exact distance to the vehicle in front. In games and other applications, Kinect, which uses distance sensors to extract human movements, has expanded the range of games. Distance sensors are also important for surgical robots such as DaVinci to know the exact distance to the affected area.

It is difficult to visualize the dimensions and distance of a room in a photograph or floor plan, but 3D mapping allows you to map the room itself. ! image.png

This kind of mapping can be easily done with the new iPhone Pro using apps like Polycam, so if you have the device, give it a try!

And the age of 3D scanners in our pockets has begun! The iPhone 12 version of Polycam is now out on the App Store https://t.co/Oih4jQTTEc! Go forth and capture your world 🚀🎥! #iPhone12Pro #iPhone12ProMax pic.twitter.com/JY8LAEi5xk

— polycam (@PolycamAI) 2020年10月23日

This article lists the major types of distance cameras in the world, their overview, features, and products used.

The goal is to help you choose the right distance sensor for your R&D project, for example. I'm a LiDAR expert, so I'm not very familiar with camera-based methods, but I've also described the most frequently used distance sensors: stereo cameras and projection cameras.

You can also read more about point cloud deep learning here: aru47.hatenablog.com

Stereo Cameras

Overview

This is a distance sensor that is widely available on the market that is used in e.g. Subaru EyeSight and other industrial products.

This WhitePaper from ensenso is also a good reference.

Obtaining Depth Information from Stereo Images Vision.pdf)

The principle is the same as how we see things in three dimensions.

If you close your eyes alternately to the left and right and look at the monitor, do you see the shift from left to right?

The brain perceives the object with the larger shift as being closer and the object with the smaller shift as being farther away. This is the principle behind stereo cameras, and it can be said that the distance sensors that we use are also based on stereo cameras**.

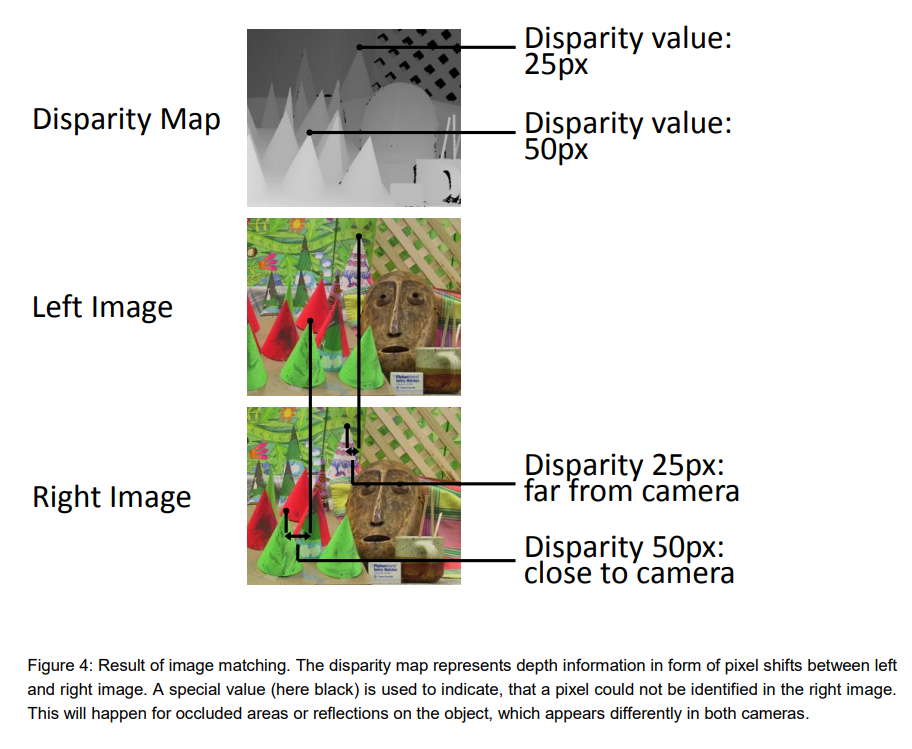

A stereo camera can measure the distance to an object based on how far the pixels have shifted. The farther apart the cameras are, the greater the pixel shift relative to the object, and the more accurate the distance. On the other hand, the closer the cameras are to each other, the more difficult it is to measure the distance because there is almost no pixel shift. Increasing the number of pixels will, in principle, increase the range, but the downside is that the amount of signal processing increases exponentially.

The biggest challenge for stereo cameras is to determine if the two cameras are looking at the same object. This requires advanced image processing techniques and makes it very difficult to guess whether distant objects are really identical or not.

Reference: Obtaining Depth Information from Stereo Images

In addition, there must be no difference in height between the left and right images in order to adapt stereo vision. In order to adapt stereo vision, there must be no height difference between the left and right images, so pre-processing is required to remove camera distortion and calibrate (rectify) the height difference.

Features

While other distance sensors require special components (such as lasers), stereo cameras can be realized by using only two ordinary commercial cameras.

However, in order to measure the distance, the left and right cameras need to see (recognize) the same location of the same object, and it is difficult to measure the distance of distant objects. The disadvantage is that the housing becomes larger if you want to see farther**, because parallax is not created for distant objects unless the camera is set farther away like EyeSight, although the mathematical formula is omitted.

Products used.

1台の PC に4種類の RGB-D カメラをつないで同時キャプチャしてみた。左上 DepthSense DS325、右上 Kinect v2、左下 Kinect v1、右下 RealSense D435。こけて倒したのは D415 (今回は不使用)。 pic.twitter.com/n5h4P2MPZO

— 床井浩平 (@tokoik) 2019年5月30日

For commercial products, Intel's RealSense** comes with software (SDK) and is easy to try.

The RealsenseD435 can be purchased on Amazon for 24,000 yen.

Here is an example of the RealsenseD435 output.

- High accuracy can be obtained at short distances (1-3m).

- High resolution because it is camera based.

- Real-time (30FPS) operation even on a CPU

These are the features of the D435. I think it is the best choice for distance sensors for hobby use.

The RealsenseD400 series also has pattern projection, but it is mostly a stereo camera that is used for distance estimation (even if the projection is turned off, the picture remains almost the same, so it is just a supplementary function).

Pattern Projection Cameras

Overview

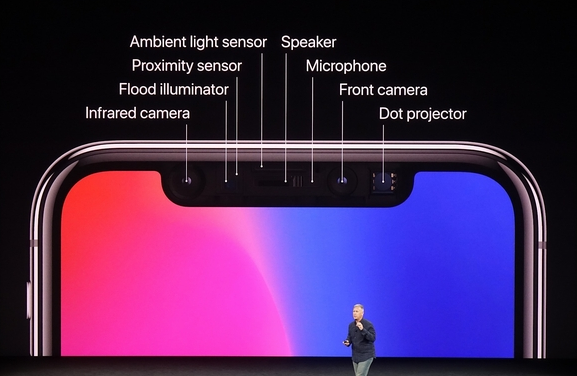

Pattern projection cameras are used in high-performance 3D cameras for industrial applications and iPhone FaceID.

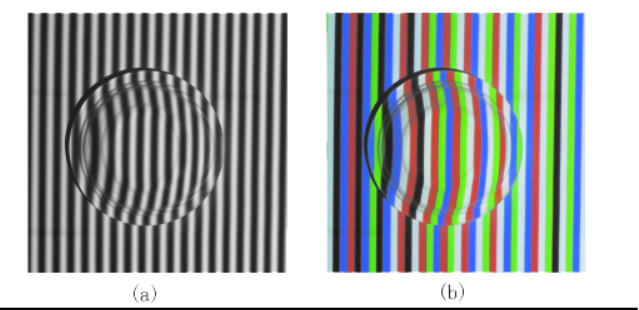

It projects a known pattern onto an object (pattern projection) and uses signal processing to derive the distance between the camera and the object from the way it is distorted.

If you project a pattern of stripes onto an object like a helmet, you will see that the stripes are distorted depending on the height of the object. By reading and analyzing such distortions, the three-dimensional shape of the helmet can be determined.

The video below also explains how the iPhone FaceID works. It uses infrared light to emit a pattern all over the face, and is able to read the exact shape of the face. Since it sees the unevenness, it is difficult to fool FaceID, which makes the security stronger.

Compared to LiDAR, the components are simpler, so the price can be reduced.

Very high accuracy can be obtained for indoor use (mm~um accuracy).

On the other hand, outdoor use with a lot of external disturbance is difficult.

There are also many approaches that use both pattern projection and stereo vision.

Ensenso, a high-precision 3D camera often used in picking robots, provides both pattern projection and stereo vision. The advantage of this camera is that it can obtain a clean point cloud even for objects that are difficult to match, which stereo vision is not good at (for example, flat walls).

Products

iPhone

! Image result for iphone face id

FaceID is an active projection camera that is used for facial recognition on the iPhone.

Every time FaceID is used, the iPhone emits an infrared pattern.

In fact, the original technology evolved from Apple's acquisition of the company (PrimeSense) that developed the Microsoft KinectV1. It's called MiniKinect by those who know it. LOL.

Industrial Products (Ensenso, Keyence)

How to reconstruct objects with an Ensenso 3D camera and data comparison using HALCON image processing: 3D image processing detects irregularities or minimal deviations that are not even visible to human eyes. #demo #objectverification #3Dvision #qualitycontrol #industry40 pic.twitter.com/3p4EEurOUW

— IDS Imaging Development Systems GmbH (@IDS_Imaging) 2018年8月9日

There aren't many examples of industrial sensor outputs on the net, but they are mm-accurate and incomparable to Realsense (which is over-spec for hobby use).

They cost several million yen each.

They are often used for inspection in factories and for robotic products. If you go to an industrial exhibition, you'll often see one of these attached to a product, so look for it.

On the other hand, the projection can only be accurately read within a few meters, making it difficult to use outdoors. Basically, it is for indoor use.

- Keyence 3D Camera, Ensenso 3D cameras

Time of Flight LiDAR

How Time of Flight works

Camera-based distance sensors (stereo cameras, projections) and LiDAR are fundamentally different in principle.

On the one hand, LiDAR measures distance based on Time of Flight.

The principle is simple: a laser beam is emitted from the enclosure as shown in the figure below, and the time it takes for the laser to be reflected back to the object is measured. If the laser beam returns after 10 seconds and the speed of light is 1m/s for simplicity, the distance to the object is

(10s * 1m/s)/2 = 5m

and we know that the object is 5 meters away. This method of deriving the distance based on the time of flight is called Time of Flight.

Since the actual speed of light is very fast, 108m/s, the time it takes for the light to return is on the order of a few picoseconds or nanoseconds, so the circuit used to measure the time needs to be highly accurate.

The type of LiDAR which directly measures the return time of the laser pulse is also called a direct time of flight sensor. FIY, The Lidar in iPad Pro and iPhone is direct time of flight.

https://tech.nikkeibp.co.jp/atcl/nxt/column/18/00001/02023/?ST=nnm

Features

The most important features of LiDAR are

- High accuracy

- Long range

- Resistant to external disturbance (can be used outdoors)

- High price

High cost Since the distance is derived directly from the return time of a strong pulsed laser beam, it is difficult to introduce errors and is highly reliable. Since these features are difficult to achieve with a camera-based system, LiDAR is expected to be used mainly for detecting distant objects in automated driving, which is required to operate even in harsh conditions.

On the other hand, the cost of LiDAR is several to dozens of times higher than that of the camera type because it requires a scanning mechanism, laser emitter, laser receiver, and many other specialized elements.

Recently, Livox and other LiDARs costing less than $1k have begun to be mass-produced and used in many robots, so the days of being shunned because of their high price may be coming to an end.

Livox全ラインナップに対応した低速(<30km/h)Slamを公開しました。先日公開した高速SLAMはHorizonのIMU情報を使っていますが、本プログラムは点群だけで計算する汎用型です。低速AGV、マッピング、AR、VR等の開発にご利用ください。https://t.co/LKLGZYPTYA pic.twitter.com/zTnn2wYbZP

— Livox_JAPAN (@LivoxTech_JP) 2020年6月29日

The greatest advantage of LiDAR is its ability to scan a fine point cloud over a long distance (100-200m) as shown in this figure.

LiDAR is the only distance sensor that can obtain a perfect point cloud over a long distance in the open air. This is why LiDAR is expected to be very popular in automated driving. (Although some people, such as Tesla, say they do not need LiDAR...)

Scanning LiDARs

It is possible to obtain the distance per pixel using the above-mentioned time of flight principle of LiDAR. But how can we get the distance information as a picture? To do this, LiDAR has a concept of scanning.

One approach is to use a mirror to scan (scan) the emitted laser beam.

The animation above is a clear representation of LiDAR scanning using a mirror. By rotating the mirror, the laser beam is scanned 360 degrees to obtain information on the entire surrounding environment.

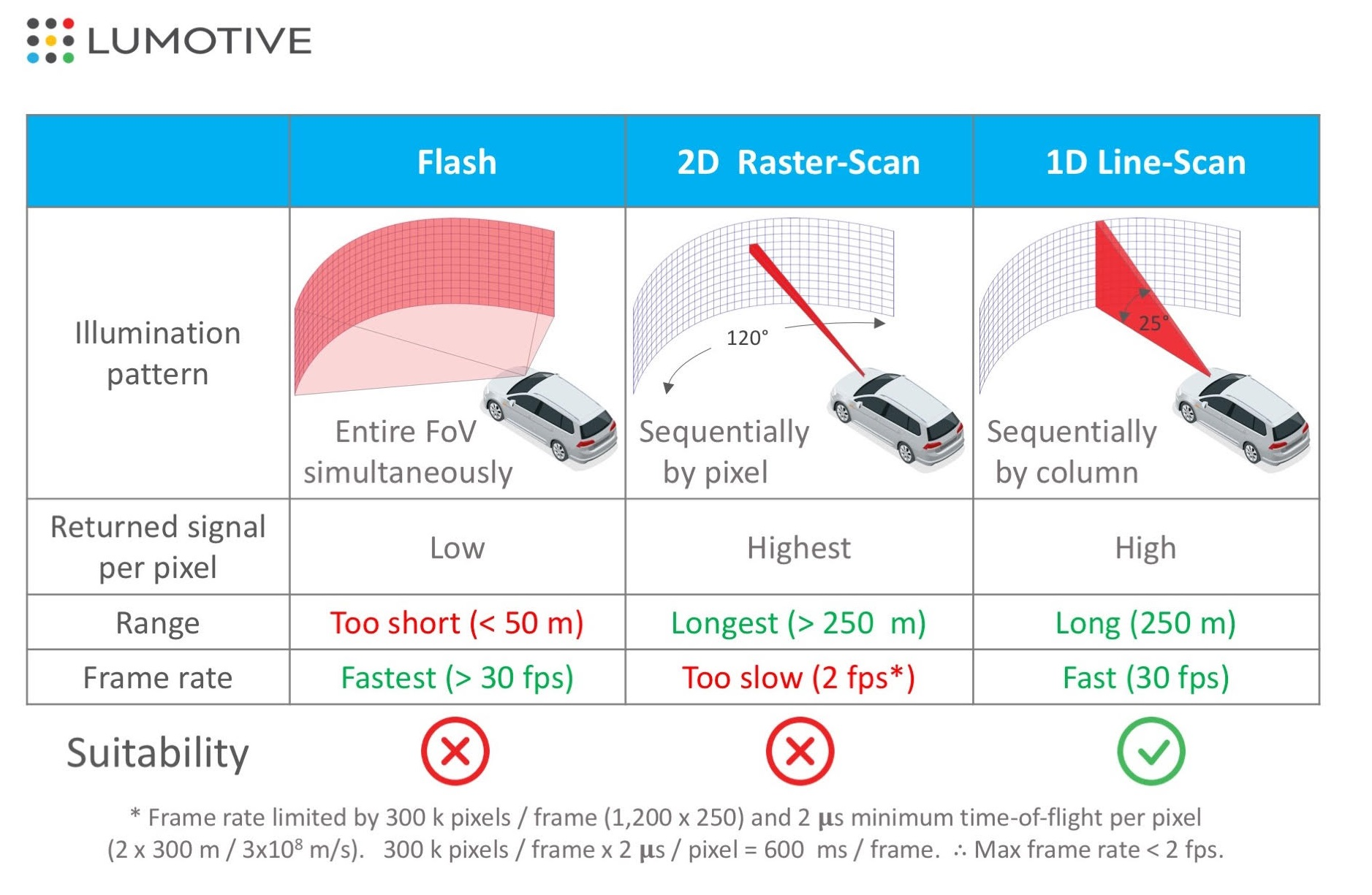

A 2D raster scan is a method of scanning two-dimensionally while acquiring information one pixel at a time, while a 1D scan is a method of scanning horizontally while acquiring vertical pixels all at once. Both methods produce distance, but the 1D scan method is more likely to achieve a higher FPS because of the shorter time required to obtain a distance image.

On the other hand, using a mirror (like a single-lens reflex camera) will increase the size of the LiDAR housing. For this reason, MEMS mirrors are being used instead of mirrors, and Optical Phased Array LiDAR, which scans optically, is being actively developed, and it is expected that LiDAR will become smaller and less expensive in the future.

Flash LiDARs

LiDAR has also been developed to acquire two-dimensional pixel information at once, just like an image sensor of a camera, without scanning. This type of LiDAR is structurally very simple, as it only emits a laser beam that covers the entire image sensor and receives the light. Therefore, it can be realized at a lower cost than the scanning type.

On the other hand, there are several issues - Short distance due to low laser power per pixel - Susceptible to external disturbance - Susceptible to interference and noise Therefore, it is not suitable for automatic driving, but it may be useful for indoor robots.

Products

Velodyne Series

The most famous ToF LiDAR products are probably those of Velodyne, the first LiDAR manufacturer to introduce LiDAR products to the world and still the reigning "king".

Their products are notorious for being very expensive, ranging from hundreds of thousands to millions, but the quality is top-notch, and the only self-driving cars that don't use Velodyne are Tesla and Waymo (Waymo uses its own LiDAR). I also imagine that Velo is often used in robotics R&D because of its high pixel count and accuracy.

Livox Horizon

Livox, a subsidiary of DJI, has released a LiDAR that achieves high accuracy while costing less than $1k, and this is becoming the mainstream for robotics projects.

You can buy them in amazon as well..

The quality is great, and the SDK is available on github, so it's easy to develop.

iPhone

The front of the iPhone has actually been equipped with ToF LiDAR (one pixel) for quite some time.

It does not do any scanning, so it is probably low cost.

The front of the iPhone has actually been equipped with ToF LiDAR (one pixel) for quite some time.

It does not do any scanning, so it is probably low cost.

The Proximity Sensor in the image is the same. When you make a call, if you bring the iPhone screen close to your face, the screen will automatically turn off. I think this is because it senses the distance between your face and the iPhone.

Note: iPhone and iPad Pro are now equipped with dToF LiDAR.

iToF LiDAR

This technology is used in Azure Kinect and other applications.